The new policy sets a precedent as the first-ever legal framework for the new technology

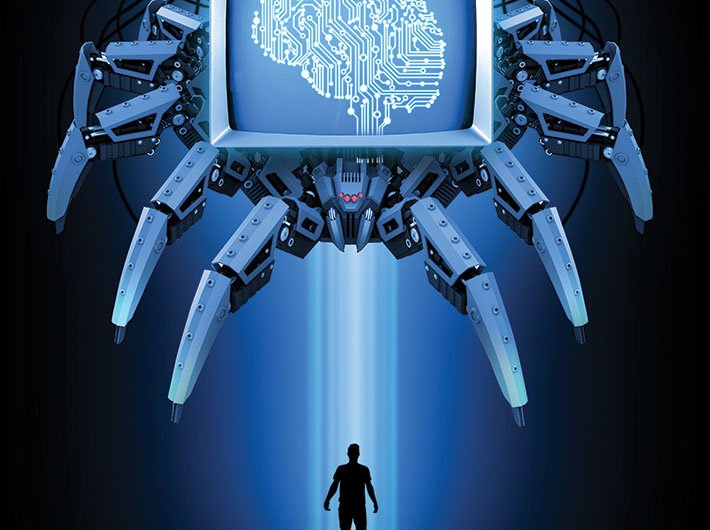

The recent European Union (EU) policy on artificial intelligence (AI) is likely to be a game-changer and could become the de facto standard, not only for how businesses conduct themselves but also for how consumers think about AI tools. Governments across the globe have been grappling with the rapid rise of AI tools and the risks they pose, ranging from misinformation and fraud to data privacy hazards. The EU regulation will serve as a launch pad for start-ups and researchers participating in the global race to build what citizens want: trustworthy AI.

The landmark framework was unveiled in March, when EU lawmakers approved the world’s first legal framework on AI. The Act seeks to ensure that AI is used safely and reliably by people across the region. The lawmakers’ stated objective was “to deliver legislation that would ensure that the ecosystem of AI in Europe would develop with the human-centric approach respecting fundamental rights and European values”. The EU is reportedly setting up its own AI Office in Brussels to monitor the largest AI systems in particular, and each of the 27 EU member states is designating its own AI watchdog to which people will be able to submit complaints. In an era of technological revolution, the EU’s AI policy stands as a beacon of responsible innovation and ethical governance.

Margrethe Vestager, Executive Vice-President of the European Commission, is frequently asked, “Why is AI being regulated? It will hamper innovation.” Her reply is: “I hope by now everyone realises that this is the important moment, the time is right now!”

The past offers a stark reminder of the colossal damage that has already been done. The failure to regulate social media has been a major setback, and it highlights the urgent need to prevent such failures from happening again. Cambridge Analytica and CubeYou are just a few such cases, as are platforms like TikTok, which many blame for dulling the minds of the younger generation. Even though today’s technology may represent only about 10 percent of what AI could become, the prospect of artificial general intelligence already opens the door to similar abuses, which makes regulation urgent.

What will it change for people living in the EU?

Nothing will change immediately. The law will take effect gradually over the next two to three years, and its effects will be felt along the way. For example, a chatbot such as ChatGPT will have to carry a clear disclaimer telling users, in effect, ‘this is an AI system or a machine you are interacting with’. The same will apply in other sectors, such as business process outsourcing (BPO) and knowledge process outsourcing (KPO). However, some applications are not regulated, AI in toys for example, even though they could influence the thoughts and behaviour of toddlers; these gaps definitely need attention. The EU AI Act has set a precedent for nations struggling to keep pace with rapid advances in AI. Its underlying principle is simple: ‘the riskier the AI system, the stricter the rules it must meet’.

Does it have any impact outside the EU?

Many are looking ahead to what is known as the ‘Brussels effect’. The idea is that the EU’s AI laws will give legislators in the rest of the world both a blueprint and an incentive to act. Embracing similar policies can enable other nations to harness the transformative potential of AI while ensuring a level playing field for businesses worldwide.

As the use of AI becomes more widespread, one must be aware of the potential dangers it poses. Adversaries can use AI to create deepfakes and synthetic media, leading to financial loss, identity theft, reputational damage, and manipulation of public opinion. AI can also be used in cyberattacks, including brute-force attacks, denial-of-service attacks, and social engineering. These risks are expected to grow as AI becomes more easily accessible. As AI becomes integrated into our daily lives, privacy will remain a crucial concern.

It is therefore important to prioritize regulations that mitigate these risks, because the consequences of doing nothing could be harsh.

Will the regulations stifle innovation?

Regulation can instead be seen as enabling innovation by establishing clear boundaries between what is allowed and what is not. For other nations, this is a pivotal time to regulate AI; the pressing question is how to do it.

How fast can rules be enforced?

Passing laws is not enough; enforcing them is crucial. But, as the saying goes, ‘where there’s a will, there’s a way’.

The EU AI Act will be the litmus test over the next couple of years. The Act has definitely set a new standard for regulating AI. However, the fear that regulation could stifle novelty looms large. Imagine a world in which AI tools are regulated and consumers can compare them with ease. This could be made possible by introducing mandatory symbols for regulated tools, similar to Meta Verified badges.

Companies using AI technology must take steps to assess and reduce risks, maintain usage logs, meet transparency and accuracy standards, ensure human oversight, and provide individuals with a way to submit complaints about AI systems. They must also explain decisions based on high-risk AI uses that affect people’s rights. Google’s recent AI Cyber Defense Initiative, which aims to reverse the ‘Defender’s Dilemma’, is a step in the right direction, enhancing security for AI applications. Another possibility is using blockchain to store and distribute AI models.

Furthermore, alongside companies implementing essential measures to secure their AI tools, governments must take the lead by establishing regulations that all domestically developed AI tools must comply with before release. Given the interconnected nature of AI, international collaboration is crucial to tackle evolving challenges and capitalize on shared opportunities. This joint effort would not only encourage innovation but also promote a cohesive global approach to AI governance.

As technology evolves, so do data breaches and cyberattacks. It is all too easy for hackers to find new security flaws among the low-hanging fruit and exploit them. This saga is emblematic of a much bigger problem in cybersecurity. The answer is not to limit technological novelty but to introduce regulations that make laws strict and entry points secure, so that they are difficult to breach.

Dr. Megha Jain is Assistant Professor, Shyam Lal College, University of Delhi. Vanyaa Gupta is a Delhi-based writer. Dr. Tinu Jain is Assistant Professor, IMI Kolkata, West Bengal, India.

Views are personal.